Adaptive AI: How Real-Time Model Switching Unlocks Smarter Applications

With dozens of new foundation models and APIs emerging every month, businesses face a tough challenge: how do you pick the right one at the right moment? Choosing incorrectly means wasted resources, slower responses, or poor results.

That’s where adaptive AI infrastructure comes in. In practice, adaptive routing can reduce latency by 20–30% and lower costs significantly without sacrificing quality.

Most discussions about multi-model routing strategies focus on speed, cost, or reliability, but these only scratch the surface. The real game-changer is adaptability.

Real-time dynamic model switching (selecting the best model for each query based on its context and intent) is reshaping how AI systems deliver value. Instead of rigid rules or one-size-fits-all logic, adaptive systems make smarter decisions on the fly, creating applications that feel more intelligent, personalized, and reliable.

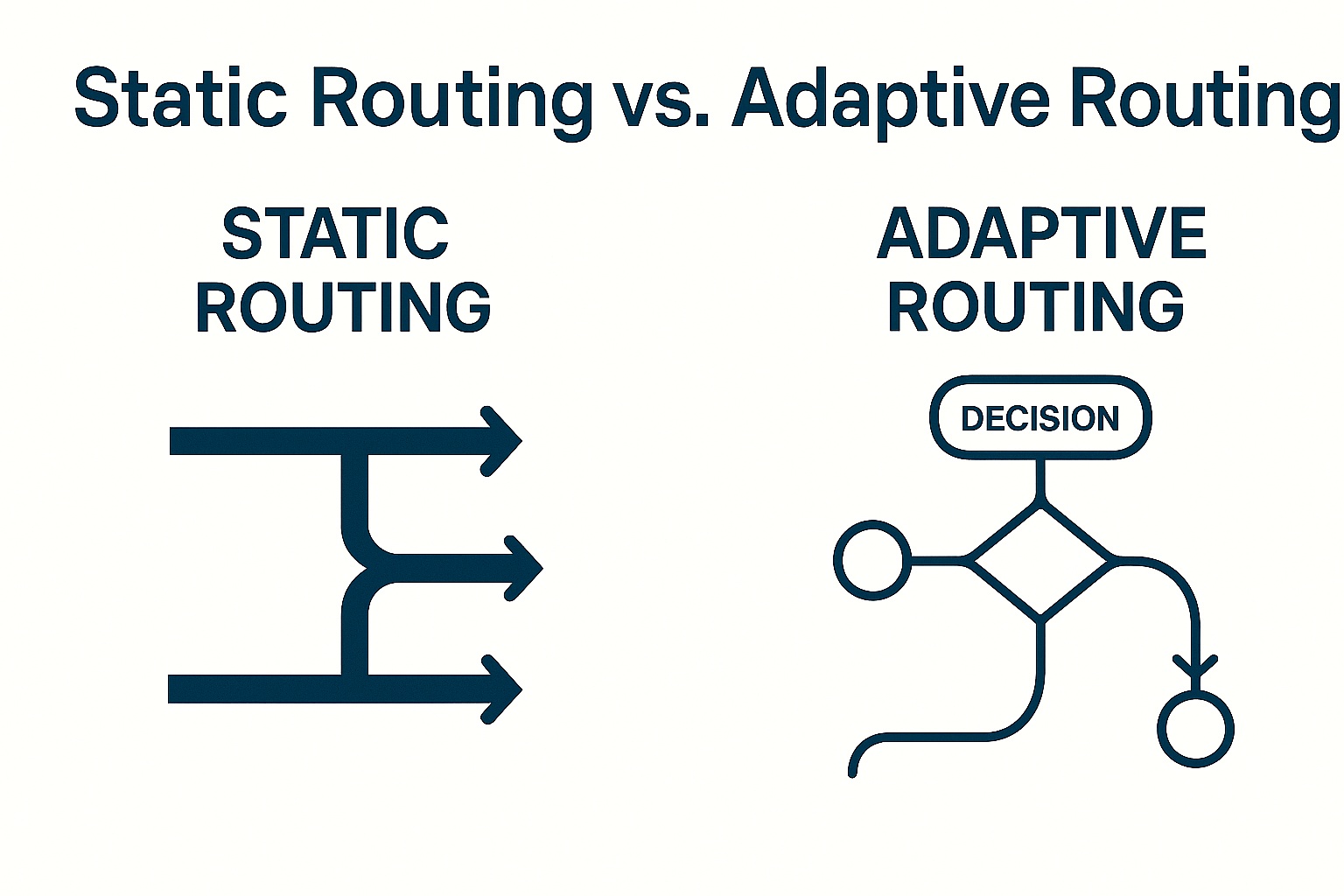

❌ Why Static Routing Falls Short

Traditional multi-model routing often works with fixed logic:

- Use Model A for cost efficiency.

- Use Model B for accuracy.

- Use Model C as a fallback.
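
For contrast, fixed logic like this amounts to little more than a lookup table. The sketch below is a hypothetical illustration: the model names, the `priority` label and the `static_route` helper are placeholders, not any real API. The route depends only on a pre-set label and never on the query itself.

```python
# Hypothetical model names: a static router maps a pre-set priority label
# to a fixed model and never inspects the query text.
STATIC_ROUTES = {
    "cost": "model-a",      # Model A for cost efficiency
    "accuracy": "model-b",  # Model B for accuracy
}
FALLBACK_MODEL = "model-c"  # Model C as a fallback


def static_route(priority: str, primary_available: bool = True) -> str:
    """Return the fixed model for a priority label, or the fallback."""
    model = STATIC_ROUTES.get(priority)
    if model is None or not primary_available:
        return FALLBACK_MODEL
    return model
```

Whether the request is a one-line FAQ or a multi-page legal question, `static_route("cost")` returns the same model. That blind spot is the one described next.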

This setup works in predictable environments. But real-world usage is rarely so simple. Queries differ dramatically in complexity, urgency, and purpose:

- A customer support query may only need a lightweight model for instant response.

- A legal research task demands a highly accurate, slower, and more expensive model.

- A creative brainstorming request might benefit from multiple models stitched together.

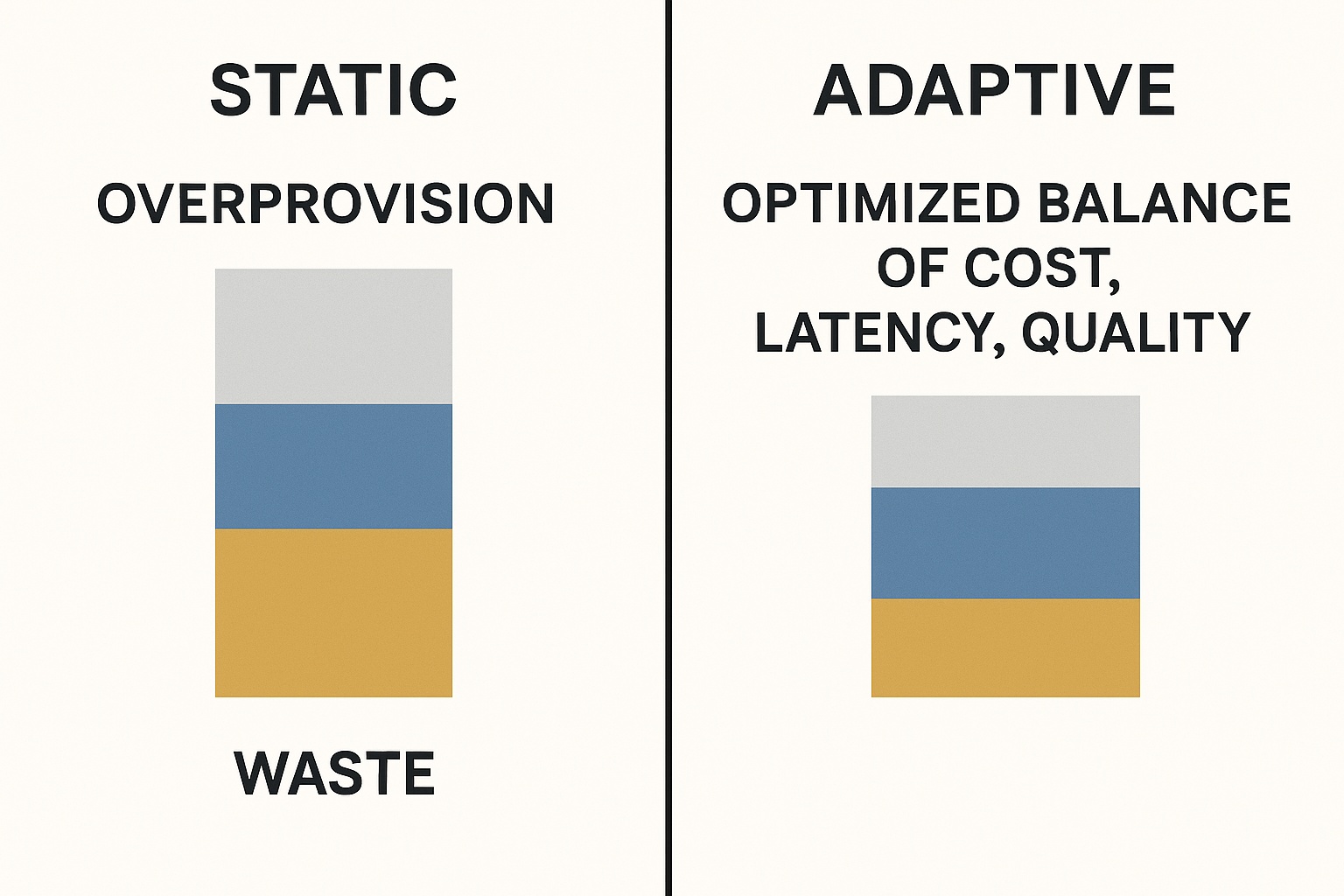

Static rules can’t handle this diversity. The result? Wasted resources (overusing heavyweight models) or unsatisfactory results (using lightweight models where depth is required).

🔄 Real-Time Model Selection

Adaptive AI orchestration, a real-time model selection approach, changes the equation. Instead of relying on pre-set rules, it evaluates each query in real time to decide which model is best suited at that exact moment.

Key signals include:

- Complexity: Simple lookup vs. nuanced reasoning.

- User intent: Does the user value speed, depth, or cost?

- Model health: Which providers are currently fast, reliable, and available?

Routing decisions happen in milliseconds, but the impact is massive. Users get better answers, systems waste fewer resources, and businesses gain adaptive AI infrastructure that responds to real-world conditions.
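
Here is a minimal sketch of how these three signals could be combined. It assumes that a keyword-and-length heuristic stands in for a real complexity classifier and that user intent arrives as an explicit label (`"speed"`, `"depth"` or `"cost"`). The `Model` fields, the tier scale and the cue words are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    tier: int             # capability tier: 1 = lightweight ... 3 = premium reasoning
    cost_per_call: float  # relative cost (hypothetical units)
    latency_ms: float     # recently observed latency
    healthy: bool         # result of the latest provider health check


# Hypothetical keyword cues that suggest nuanced reasoning rather than a lookup.
REASONING_CUES = ("why", "compare", "analyze", "explain", "legal", "diagnos")


def estimate_complexity(query: str) -> int:
    """Crude stand-in for a real classifier: 1 = simple lookup, 3 = nuanced reasoning."""
    q = query.lower()
    cues = sum(cue in q for cue in REASONING_CUES)
    words = len(q.split())
    if cues >= 2 or words > 60:
        return 3
    if cues == 1 or words > 20:
        return 2
    return 1


def select_model(query: str, intent: str, models: list[Model]) -> Model:
    """Combine complexity, user intent ("speed", "depth" or "cost") and model health."""
    healthy = [m for m in models if m.healthy]
    if not healthy:
        raise RuntimeError("no healthy models available")
    needed = estimate_complexity(query)
    capable = [m for m in healthy if m.tier >= needed]
    if not capable:
        # Nothing healthy meets the bar: degrade to the most capable healthy model.
        return max(healthy, key=lambda m: m.tier)
    if intent == "speed":
        return min(capable, key=lambda m: m.latency_ms)
    if intent == "depth":
        return max(capable, key=lambda m: m.tier)
    return min(capable, key=lambda m: m.cost_per_call)  # default: cheapest capable model


models = [
    Model("light", 1, 0.1, 120, True),
    Model("mid", 2, 1.0, 400, True),
    Model("premium", 3, 5.0, 1500, True),
]
print(select_model("What are your opening hours?", "cost", models).name)  # → light
print(select_model("Compare these contracts and explain the legal risk", "cost", models).name)  # → premium
```

Health is applied as a hard filter before intent is considered. As a result, an unavailable premium model is never chosen, however well it would otherwise fit.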

🌟 Benefits Beyond Speed and Cost

Adaptive routing doesn’t just optimize for performance; it creates smarter, more human-like systems:

- Higher output quality: Queries are matched to the models that specialize in their domains.

- Personalized experiences: Casual queries are answered instantly, while high-stakes ones are routed to premium reasoning models.

- Smarter resource allocation: Lightweight models handle routine work, while only complex tasks escalate to expensive LLMs.
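
One common way to implement this kind of allocation is a cascade: ask the cheapest model first and escalate only when its answer is not confident enough. The sketch below rests on a simplifying assumption: each model returns a confidence score alongside its answer. In practice that score might come from a verifier or a separate classifier. The stub models and the 0.8 threshold are hypothetical.

```python
from typing import Callable

# Assumption: each model returns (answer, confidence in [0, 1]). Real APIs rarely
# expose a calibrated confidence directly; it might come from a verifier or classifier.
ModelFn = Callable[[str], tuple[str, float]]


def cascade(query: str, tiers: list[tuple[str, ModelFn]],
            threshold: float = 0.8) -> tuple[str, str]:
    """Try models from cheapest to most expensive and stop at the first confident answer.

    Returns (model_name, answer). The last (premium) tier always answers.
    """
    if not tiers:
        raise ValueError("at least one tier is required")
    for name, model in tiers[:-1]:
        answer, confidence = model(query)
        if confidence >= threshold:
            return name, answer
    name, model = tiers[-1]
    answer, _ = model(query)
    return name, answer


# Hypothetical stub models for illustration.
def light_model(query: str) -> tuple[str, float]:
    # Confident only on short, routine questions.
    return "light answer", 0.9 if len(query.split()) < 10 else 0.4


def premium_model(query: str) -> tuple[str, float]:
    return "premium answer", 0.95


tiers = [("light", light_model), ("premium", premium_model)]
print(cascade("How do I reset my password?", tiers))
print(cascade("Summarize the regulatory exposure across these three filings and flag conflicts", tiers))
```

The trade-off is extra latency on escalated queries, because they pay for the cheap attempt first. A cascade therefore works best when most traffic is routine.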

💡 Proof point: Adaptive AI can cut model costs by up to 40% while maintaining (or even improving) output quality.

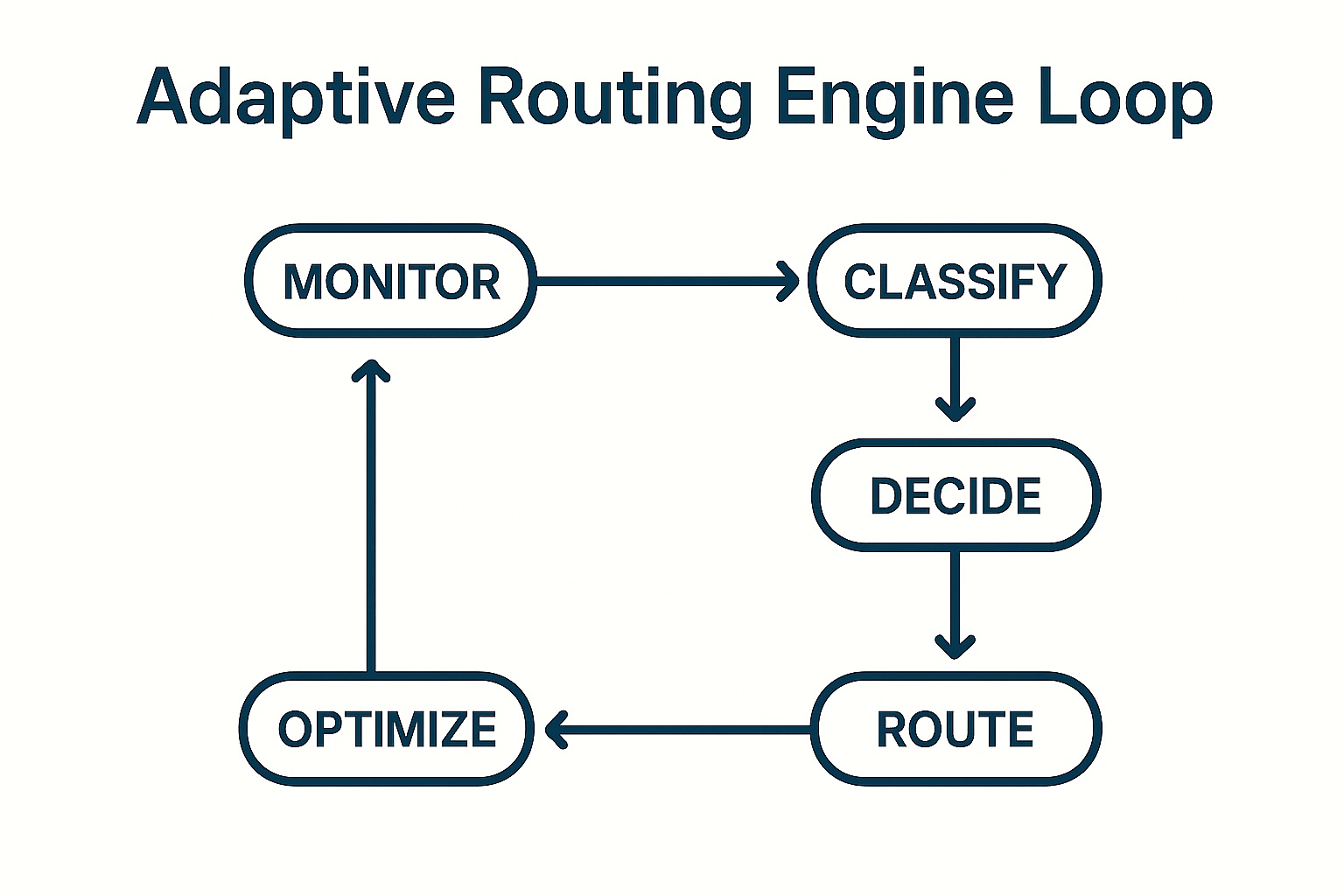

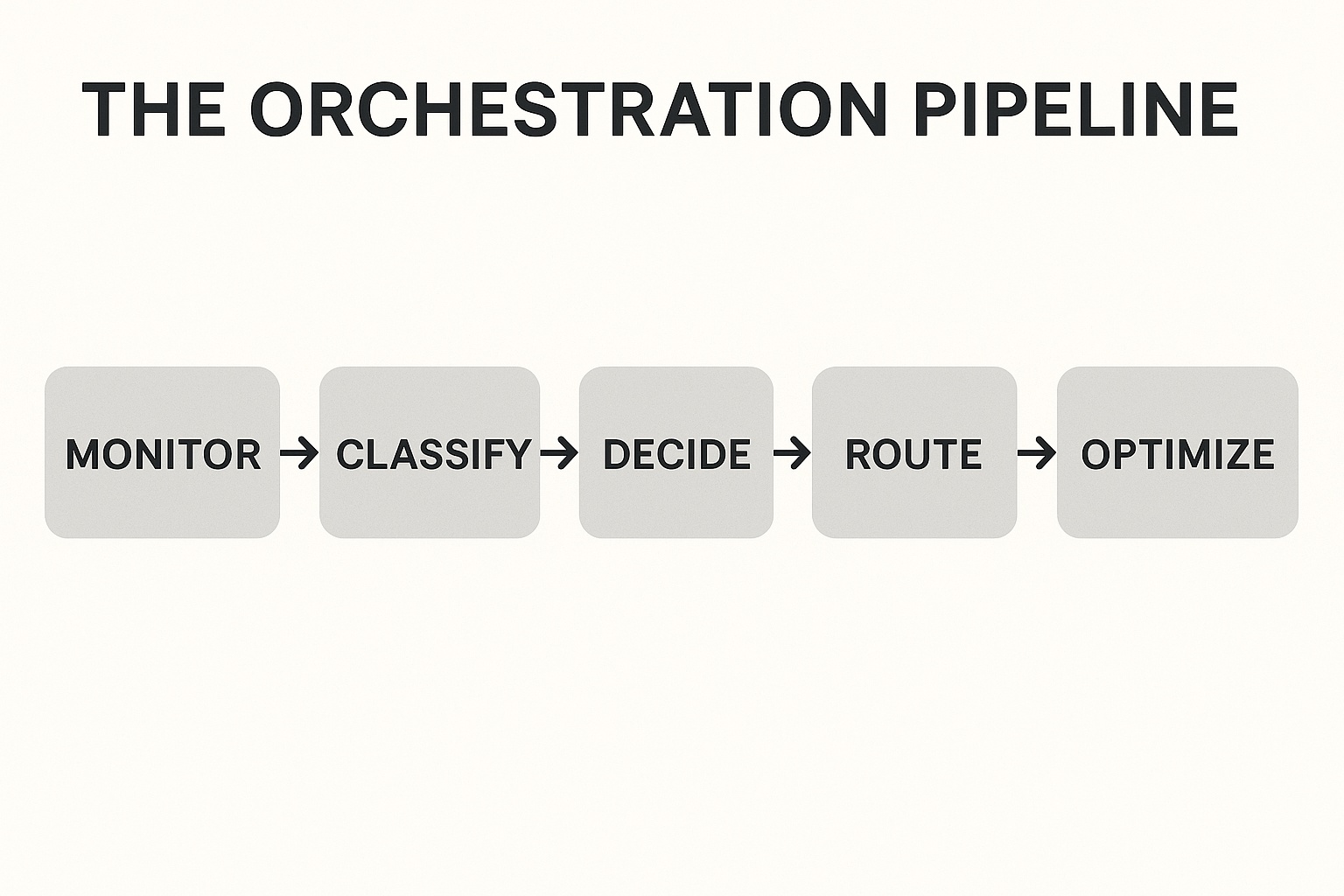

🛠️ Technical Strategies for Adaptive Routing

Building adaptive AI infrastructure requires more than load balancing. It demands an intelligent orchestration layer:

- Real-time performance monitoring: Track latency, uptime, and error rates across providers.

- Query classification: Use embeddings or machine-learning classifiers to categorize queries by complexity, domain, and intent.

- Decision engines: Weigh trade-offs (speed vs. cost vs. accuracy) and assign models dynamically.

- Hybrid orchestration: Run queries across multiple models and merge the results for stronger, more reliable outputs.
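
The monitoring and decision-engine pieces can be sketched together. This version assumes exponentially weighted moving averages (EWMA) for latency and error rate, plus a simple weighted sum for the speed/cost/accuracy trade-off. The `ProviderStats` class, the normalization caps, the weight names and the per-provider accuracy estimates are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProviderStats:
    """Exponentially weighted health stats for one provider (hypothetical design)."""
    latency_ms: float = 0.0
    error_rate: float = 0.0
    alpha: float = 0.2  # smoothing factor: higher reacts faster to new samples
    samples: int = 0

    def record(self, latency_ms: float, ok: bool) -> None:
        err = 0.0 if ok else 1.0
        if self.samples == 0:
            # The first observation seeds the averages directly.
            self.latency_ms, self.error_rate = latency_ms, err
        else:
            self.latency_ms += self.alpha * (latency_ms - self.latency_ms)
            self.error_rate += self.alpha * (err - self.error_rate)
        self.samples += 1


def score(stats: ProviderStats, cost: float, accuracy: float, weights: dict[str, float],
          max_latency_ms: float = 5000.0, max_cost: float = 10.0) -> float:
    """Higher is better; speed and cost terms are normalized to [0, 1]."""
    speed = 1.0 - min(stats.latency_ms / max_latency_ms, 1.0)
    cheapness = 1.0 - min(cost / max_cost, 1.0)
    weighted = (weights["speed"] * speed
                + weights["cost"] * cheapness
                + weights["accuracy"] * accuracy)
    # Scale by reliability so a failing provider loses traffic quickly.
    return weighted * (1.0 - stats.error_rate)


def choose(providers: dict[str, tuple[ProviderStats, float, float]],
           weights: dict[str, float]) -> str:
    """providers maps name -> (stats, cost per call, offline accuracy estimate)."""
    return max(providers, key=lambda name: score(*providers[name], weights))


fast, accurate = ProviderStats(), ProviderStats()
fast.record(200, ok=True)
accurate.record(2000, ok=True)
providers = {"fast": (fast, 0.5, 0.70), "accurate": (accurate, 5.0, 0.95)}
print(choose(providers, {"speed": 0.6, "cost": 0.3, "accuracy": 0.1}))  # → fast
print(choose(providers, {"speed": 0.1, "cost": 0.1, "accuracy": 0.8}))  # → accurate
```

Changing `weights` per request is one way to reflect user intent. Because of the reliability factor, a run of errors pushes traffic away from even the most accurate provider.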

📈 Industry Use Cases

Adaptive routing has transformative implications across industries:

Healthcare

Patients get faster triage and more reliable diagnostic support. Patient queries are routed to lightweight models for instant responses, while complex diagnostic or research tasks are escalated to reasoning-heavy LLMs.

Finance

Analysts reduce time spent on compliance research, improving decision-making speed. Lightweight models handle routine checks, while high-accuracy models handle regulatory or market research tasks to minimize risk.

Customer Support

End users experience instant resolutions with smooth escalation paths. Common queries are instantly answered by cost-efficient models, while more complex issues are routed to advanced reasoning models.

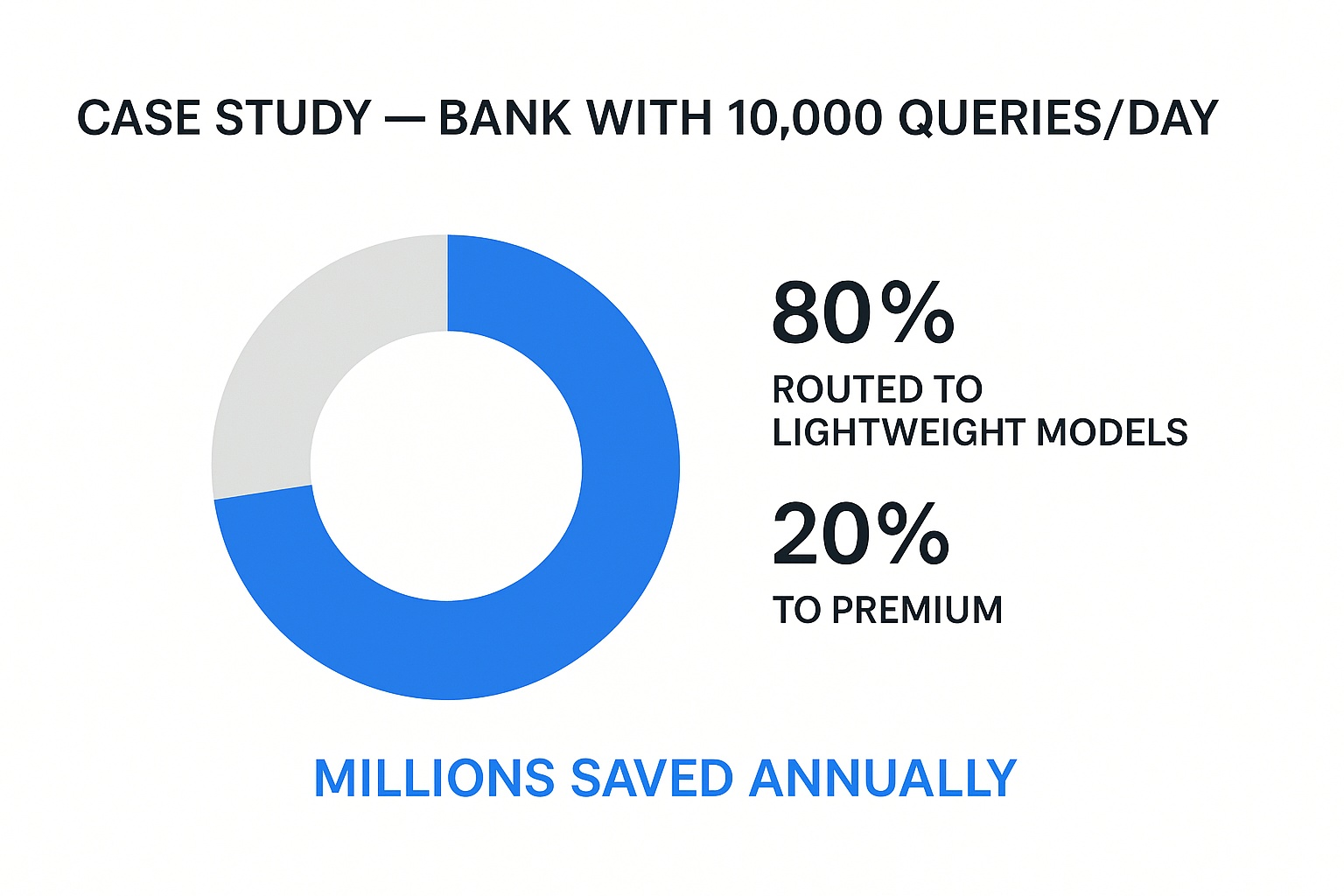

🚨 Real-World Example:

When a global bank receives 10,000 compliance queries daily, adaptive routing ensures 80% are handled instantly by lightweight models, while the remaining 20% escalate to high-accuracy LLMs for more detailed analysis. This dynamic approach saves the bank millions annually in operational costs while maintaining regulatory compliance and service quality.
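
The arithmetic behind an 80/20 split is easy to check. The sketch below uses hypothetical per-query prices ($0.001 for the lightweight model, $0.01 for the high-accuracy LLM); these are placeholders, not figures from the example above. With those prices, the blended daily bill is 8,000 × $0.001 + 2,000 × $0.01 = $28, versus 10,000 × $0.01 = $100 if every query went to the heavy model. In general, the fraction saved is the light share times (1 − light price / heavy price), so it depends on the price gap between tiers.

```python
def daily_cost(volume: int, light_share: float,
               light_price: float, heavy_price: float) -> float:
    """Blended daily cost when a `light_share` fraction of queries is served
    by the lightweight model and the rest escalate to the heavy model."""
    light_queries = volume * light_share
    heavy_queries = volume - light_queries
    return light_queries * light_price + heavy_queries * heavy_price


def savings_fraction(light_share: float, light_price: float, heavy_price: float) -> float:
    """Fraction saved versus sending every query to the heavy model.

    Equals 1 - daily_cost(...) / (volume * heavy_price); the volume cancels out.
    """
    return light_share * (1.0 - light_price / heavy_price)


# Hypothetical per-query prices in USD; real pricing varies widely by provider.
LIGHT_PRICE, HEAVY_PRICE = 0.001, 0.01
baseline = daily_cost(10_000, 0.0, LIGHT_PRICE, HEAVY_PRICE)  # everything to the heavy model
adaptive = daily_cost(10_000, 0.8, LIGHT_PRICE, HEAVY_PRICE)  # the 80/20 split
print(f"baseline ${baseline:.2f}/day, adaptive ${adaptive:.2f}/day, "
      f"saved {savings_fraction(0.8, LIGHT_PRICE, HEAVY_PRICE):.0%}")
```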

These examples show how AI model orchestration adapts to domain-specific needs, ensuring quality without over-spending.

🚀 Why It Matters

In a world of rapidly evolving AI models, businesses that rely on static routing will fall behind. Adaptive AI ensures applications stay competitive, efficient, and future-proof.

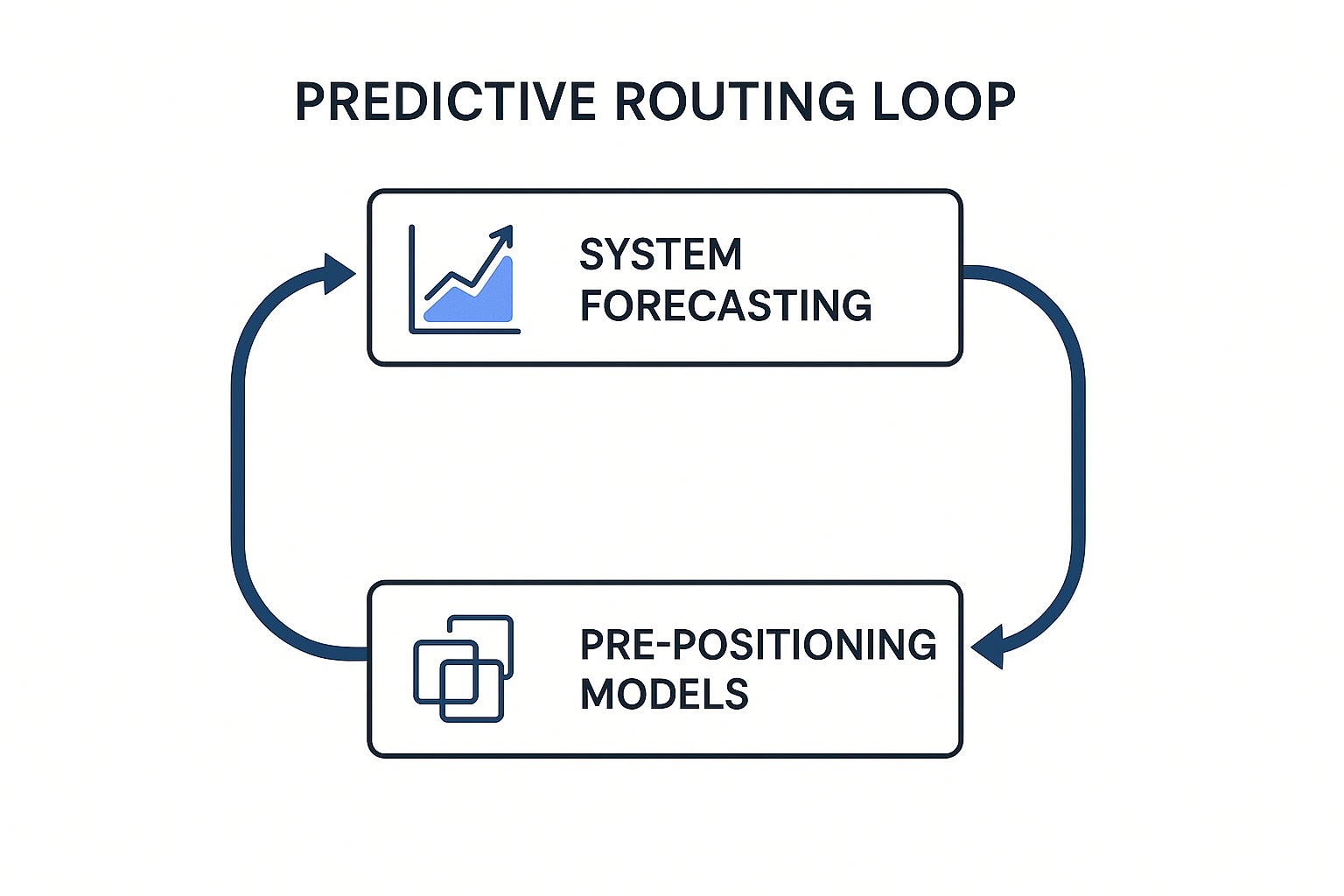

🔮 Looking Ahead: Predictive & Contextual Routing

The next frontier is predictive and contextual dynamic model switching. Adaptive systems won’t just react; they’ll anticipate:

- Shift traffic before slowdowns occur.

- Predict query types and pre-select optimal models.

- Use contextual memory to adapt based on user behavior patterns.

This creates self-optimizing AI systems that grow smarter with every interaction.

✅ Conclusion

Speed, cost, and resilience will always matter in AI. But the next leap forward is adaptability.

The explosion of foundation models doesn’t have to create confusion. With Kumari AI, the choice is always automatic, adaptive, and right for the moment.

At Kumari AI, adaptability is more than a principle; it’s our competitive edge. Unlike static pipelines, our orchestration continuously learns from usage data, improving routing accuracy over time. Our decision engine monitors real-time model health, classifies queries, and intelligently routes tasks, ensuring the right model at the right moment.

👉 As new models continue to emerge, businesses don’t need to worry about choosing the wrong one: Kumari AI’s adaptive AI infrastructure ensures the best model is always chosen for the moment.

If you want your applications to be smarter, faster, and future-ready, explore how Kumari AI can power your next breakthrough → kumari.ai

📌 Key Takeaways

- Static routing is limited and wastes resources.

- Adaptive AI dynamically selects the best model for each query.

- Benefits: better quality, personalization, and smarter allocation.

- Strategies: monitoring, classification, decision engines, orchestration.

- Future: predictive and contextual self-optimizing routing.