Rethinking AI: When the System Picks Its Own Architecture

Artificial intelligence is evolving faster than most of us ever imagined. But here’s a thought: what happens when AI doesn’t just run on models we’ve built but starts shaping its own design?

At Kumari AI, we’ve already built systems that orchestrate across multiple models, intelligently routing each prompt to the model best suited to it. But the horizon is shifting. Imagine a world where AI doesn’t just select between models; it actively builds or adapts its own architecture in real time to fit the task at hand.

From Routing to Self-Selection

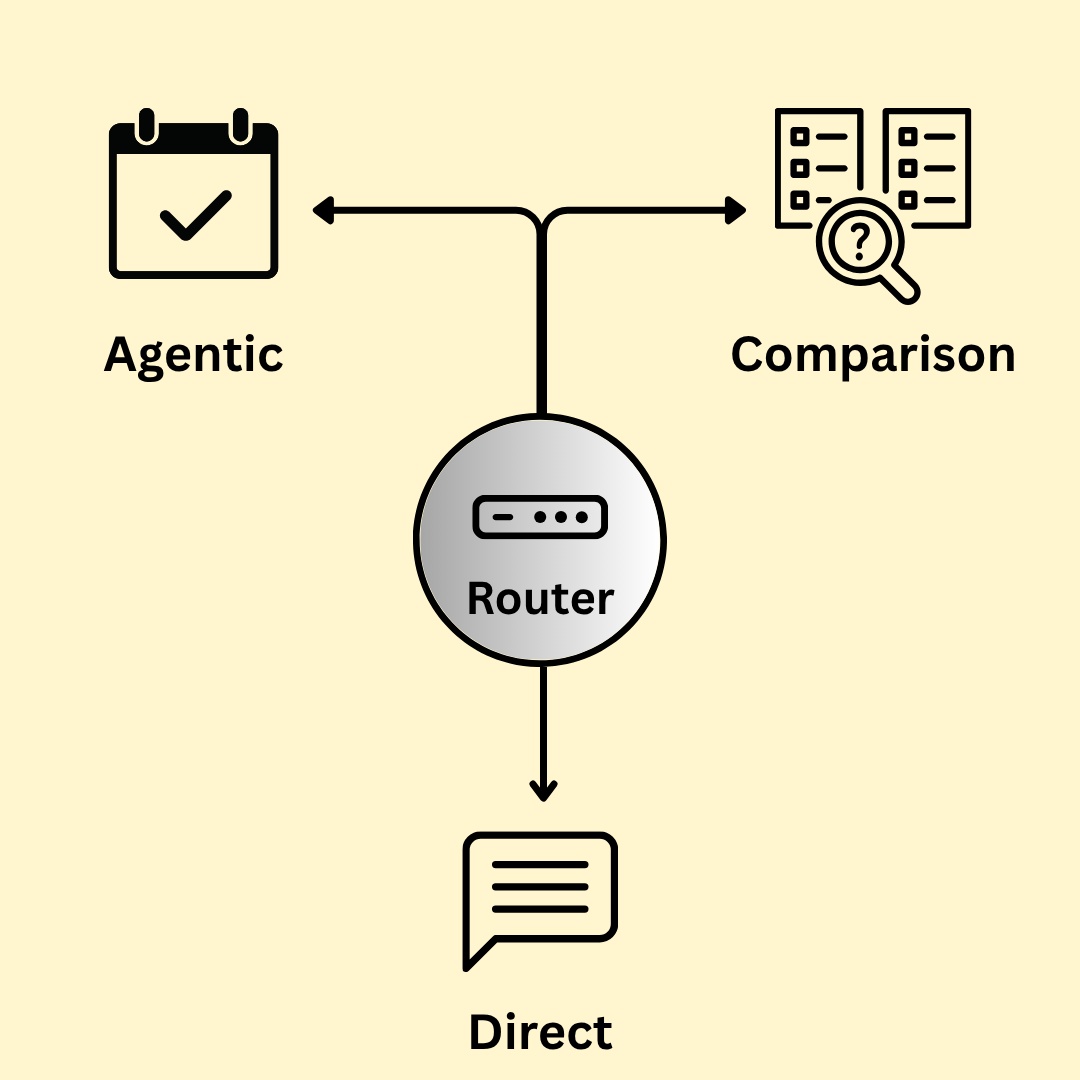

Today, multi-model orchestration means routing between existing choices, balancing cost, latency, and accuracy to pick the best-fit LLM. That’s what powers Kumari AI.
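To make the routing idea concrete, here is a minimal sketch of a weighted trade-off router. It is not Kumari AI’s actual routing engine: the model names, prices, latencies, and accuracy estimates are made-up placeholders, and the weighted-sum score is just one simple way to balance cost, latency, and accuracy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    cost_per_1k_tokens: float  # USD; placeholder figures, not real pricing
    latency_ms: float          # typical response time; placeholder
    accuracy: float            # estimated task quality in [0, 1]; placeholder


def route(models, w_accuracy=1.0, w_cost=0.5, w_latency=0.3, max_latency_ms=None):
    """Return the model with the best weighted accuracy/cost/latency trade-off."""
    # Hard constraint first: drop models that exceed the latency budget
    candidates = [m for m in models
                  if max_latency_ms is None or m.latency_ms <= max_latency_ms]
    if not candidates:
        raise ValueError("no model meets the latency budget")

    # Guard against division by zero if every candidate is free or instant
    max_cost = max(m.cost_per_1k_tokens for m in candidates) or 1.0
    max_latency = max(m.latency_ms for m in candidates) or 1.0

    def score(m):
        # Cost and latency are scaled relative to the most expensive/slowest
        # candidate and subtracted, since lower is better for both
        return (w_accuracy * m.accuracy
                - w_cost * m.cost_per_1k_tokens / max_cost
                - w_latency * m.latency_ms / max_latency)

    return max(candidates, key=score)


# Hypothetical model catalogue, for illustration only
MODELS = [
    ModelProfile("large-model", 0.03, 1200, 0.92),
    ModelProfile("mid-model", 0.003, 600, 0.85),
    ModelProfile("small-model", 0.0005, 200, 0.70),
]

print(route(MODELS).name)                      # balanced weights -> mid-model
print(route(MODELS, max_latency_ms=300).name)  # tight latency budget -> small-model
print(route(MODELS, w_accuracy=10.0).name)     # accuracy-first -> large-model
```

Changing the weights or the latency budget changes which model wins, which is exactly the cost, latency, and accuracy balancing described above.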

But imagine if AI could go beyond that, restructuring its own “brain” on the fly. Not just OpenAI here and Anthropic there, but entirely new architectures forming in the moment, designed to solve the exact challenge it faces.

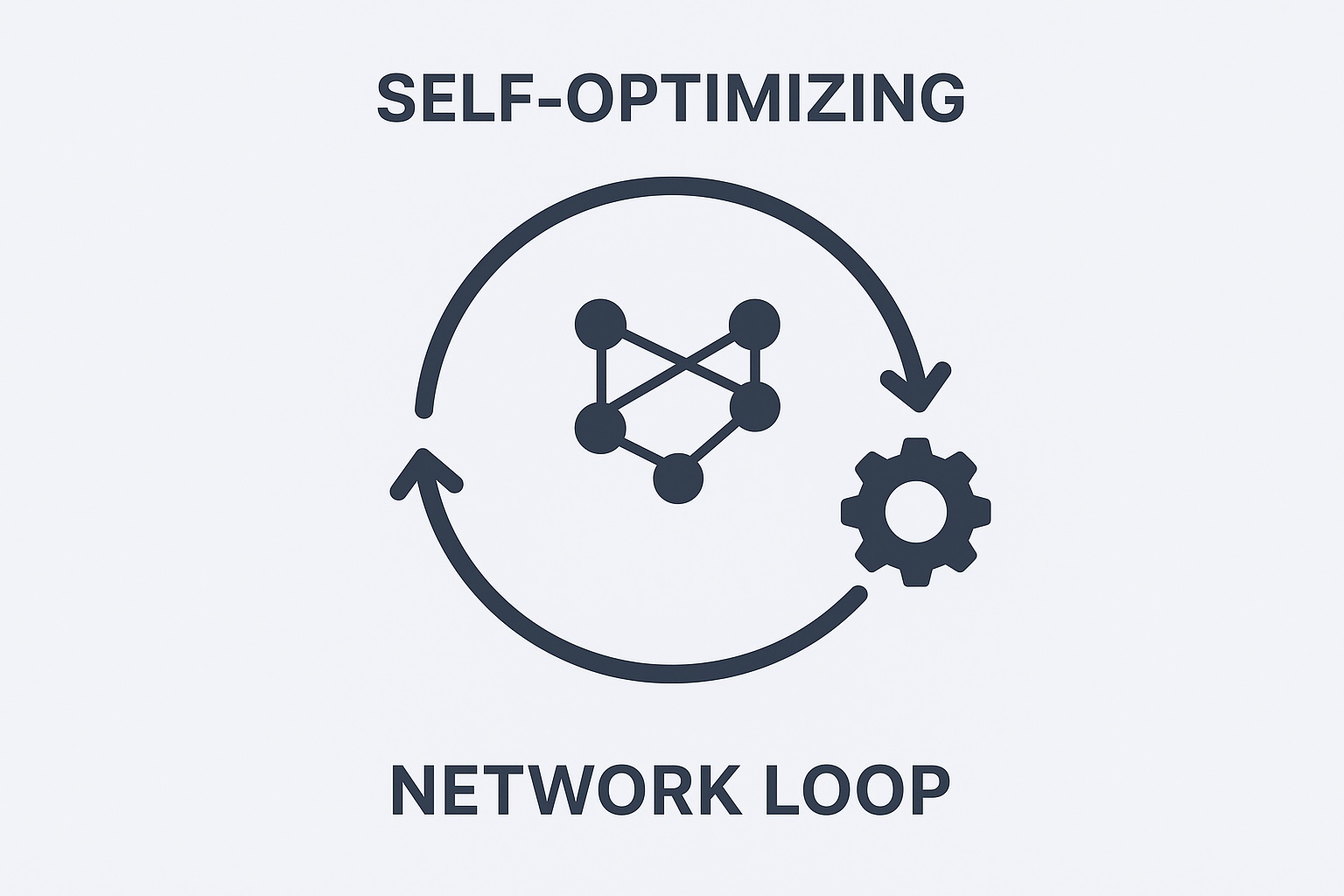

The Path Toward Self-Improvement

This is where the idea of recursive self-improvement comes in: AI that continuously refines itself without waiting for human updates.

Picture a system that tweaks its own hyperparameters, experiments with new neural pathways, or spins up lightweight modules built specifically for the workload in front of it.

The concept of self-optimizing models is no longer pure science fiction. Research is already pointing in this direction, with early but promising evidence that systems can adapt and evolve their own architectures rather than relying solely on fixed, human-designed ones.

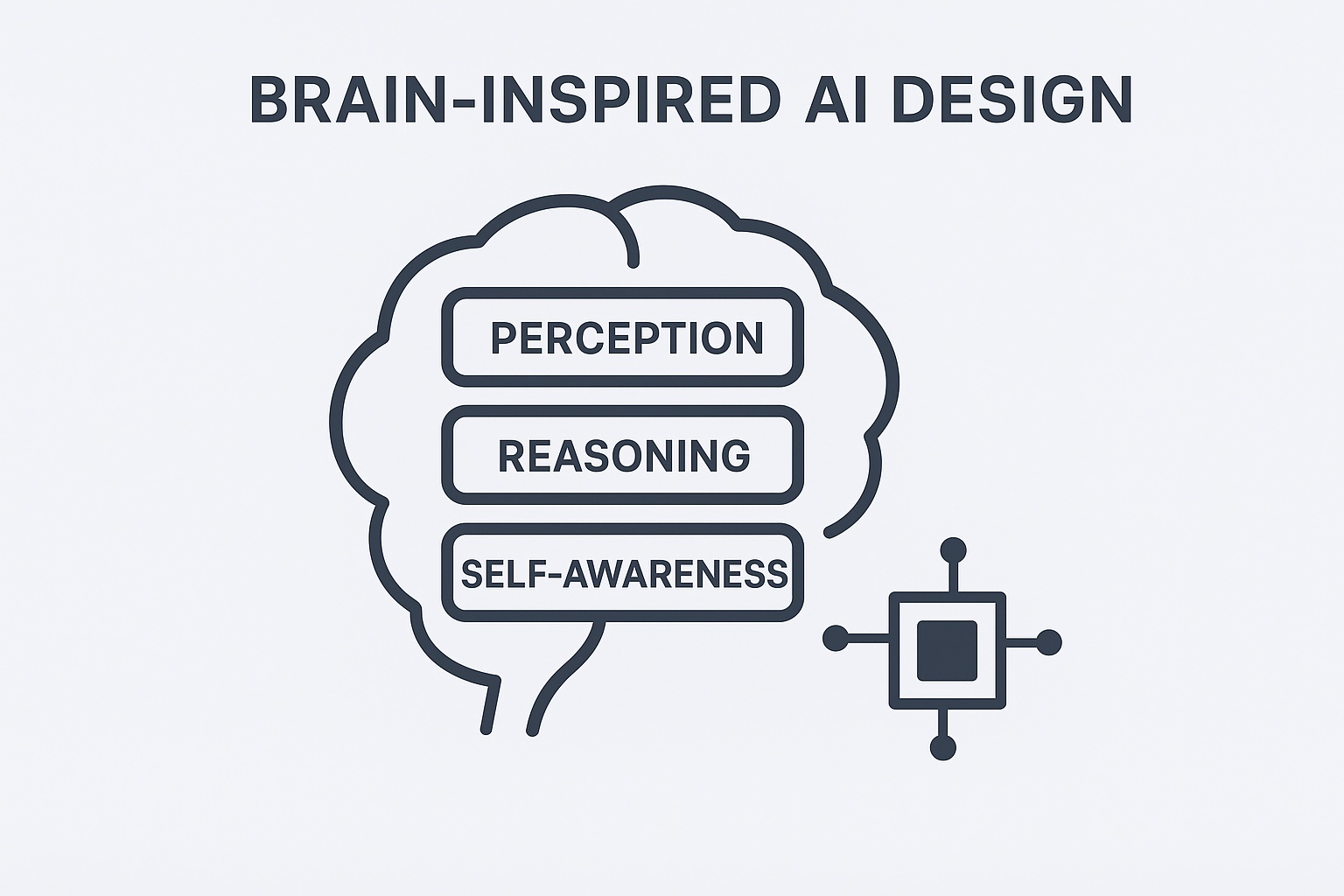

Tracking Brain-Like Blueprints

Neuroscience is playing an unexpected role in this story. Researchers are designing brain-inspired architectures that go beyond simple mimicry, layering perception, reasoning, and even self-awareness into new AI systems.

Some approaches go further, proposing hierarchies of “self” that range from sensing the physical world to social and conceptual understanding.

At Kumari AI, these aren’t just fascinating theories. They shape how we think about orchestration that’s not only adaptive but also safe, explainable, and grounded in human-centered design.

Digital Twins and Neural Simulations

One of the most exciting breakthroughs comes from neuroscience labs. Scientists have created digital twins of the mouse visual cortex, training AI models on recorded neural activity precisely enough to predict how real neurons respond to new stimuli.

Now imagine scaling that to humans. As data collection improves, digital twins of human brain processes could guide AI toward architectures that don’t just imitate intelligence but mirror the strategies our brains use naturally.

This could open the door to AI that constructs its own architectures: systems optimized for efficiency and guided by biology-inspired neural architecture search.
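Neural architecture search can be illustrated with its simplest variant, random search: sample candidate architectures from a search space, score each one, and keep the best. The sketch below is purely illustrative. The search space, the `proxy_accuracy` function (a hypothetical stand-in for actually training and validating each network), and the size penalty are all assumptions, and nothing in it is biologically inspired; it only shows the search loop and how an efficiency term can favor leaner designs.

```python
import random

# Hypothetical search space: each architecture is a plain MLP shape
SEARCH_SPACE = {
    "num_layers": [1, 2, 4, 8],
    "hidden_width": [32, 64, 128, 256],
}


def param_count(num_layers, hidden_width, input_dim=64, output_dim=10):
    """Parameter count (weights + biases) of a plain fully connected MLP."""
    dims = [input_dim] + [hidden_width] * num_layers + [output_dim]
    return sum(a * b + b for a, b in zip(dims, dims[1:]))


def proxy_accuracy(num_layers, hidden_width):
    """Hypothetical stand-in for 'train briefly, measure validation accuracy'."""
    capacity = num_layers * hidden_width
    return capacity / (capacity + 200)  # diminishing returns as capacity grows


def objective(arch, size_penalty=1e-6):
    # Reward (proxy) accuracy but charge for parameters, so leaner nets can win
    return proxy_accuracy(**arch) - size_penalty * param_count(**arch)


def random_search(n_trials=50, seed=0):
    """Random-search NAS: sample architectures, keep the best-scoring one."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(n_trials):
        arch = {key: rng.choice(options) for key, options in SEARCH_SPACE.items()}
        score = objective(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


arch, score = random_search(n_trials=500)
print(arch, round(score, 3))
```

With these placeholder numbers, the size penalty is what stops the search from simply picking the biggest network: the 8-layer, 256-wide candidate has the highest proxy accuracy but loses once its parameter count is charged for.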

Ethical and Technical Frontiers

But with great potential comes great complexity.

If an AI can build and adapt its own architecture, it could evolve in unpredictable ways, raising questions of transparency, alignment, and control. That’s both exciting and risky.

We’ll need new governance frameworks: transparency tools, explainability mechanisms, and oversight structures that ensure self-directed AI remains aligned with human goals rather than racing ahead unchecked.

What It Means for Kumari AI

For Kumari, the vision of self-selecting and adaptive architectures opens up powerful possibilities:

- Ultra-specialized responses: Sub-networks tailored in real time, producing more accurate, nuanced, and context-sensitive answers.

- Efficiency on demand: Instead of running massive models, AI could assemble leaner architectures that use just enough compute for the task.

- Continuous evolution: Our routing engine wouldn’t just select; it would learn to design better paths over time.

Of course, this vision requires new orchestration layers: introspection tools, transparency dashboards, and safeguards to keep self-built architectures aligned, safe, and explainable.
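The “continuous evolution” idea, a router that learns better paths over time, can be sketched as a multi-armed bandit. The epsilon-greedy version below is an assumption for illustration, not a description of Kumari AI’s engine: the route names and success rates are hypothetical, and the reward is a simulated 0/1 “was the answer good?” signal. The router mostly exploits the route with the best average reward so far and occasionally explores the others.

```python
import random


class EpsilonGreedyRouter:
    """Learns which route works best from feedback (epsilon-greedy bandit)."""

    def __init__(self, routes, epsilon=0.1, seed=None):
        self.routes = list(routes)
        self.epsilon = epsilon
        self.counts = {r: 0 for r in self.routes}
        self.values = {r: 0.0 for r in self.routes}  # running mean reward per route
        self.rng = random.Random(seed)

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.routes)  # explore a random route
        return max(self.routes, key=lambda r: self.values[r])  # exploit best so far

    def update(self, route, reward):
        self.counts[route] += 1
        # Incremental mean: no need to store every past reward
        self.values[route] += (reward - self.values[route]) / self.counts[route]


def simulate(success_rates, rounds=2000, seed=42):
    """Feed the router simulated feedback; success_rates are hypothetical."""
    env = random.Random(seed + 1)  # separate RNG for the simulated environment
    router = EpsilonGreedyRouter(success_rates, epsilon=0.1, seed=seed)
    for _ in range(rounds):
        route = router.choose()
        reward = 1.0 if env.random() < success_rates[route] else 0.0
        router.update(route, reward)
    return router


# Hypothetical routes and their (unknown to the router) success rates
SUCCESS_RATES = {"route-a": 0.3, "route-b": 0.8, "route-c": 0.5}

router = simulate(SUCCESS_RATES)
print({r: round(v, 2) for r, v in router.values.items()})
print(router.counts)
```

Over many rounds the router should concentrate its traffic on the route with the highest success rate while still sampling the others now and then, which is what lets it notice if conditions change.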

Final Thought

As Kumari AI pushes the boundaries of multi-model orchestration, the next frontier is clear: systems that don’t just route between models, but craft entirely new neural frameworks of their own.

It’s a bold step, blending neuroscience insights with adaptive AI design to unlock unprecedented flexibility. But it’s also a step that must be taken with equal measures of innovation and caution.

🔑 Key Takeaways

- AI may one day design its own neural architectures, moving beyond pre-trained models.

- Concepts like recursive self-improvement, adaptive AI, and neural architecture search are driving this vision.

- Digital twins and neuroscience-inspired frameworks could guide the next generation of AI design.

- Governance and oversight will be crucial to ensure self-optimizing models remain safe and aligned.

- Kumari AI is building toward this future with its orchestration-first approach.

Join the Journey

At Kumari AI, we’re exploring these possibilities in real time. Follow our journey as we shape a future in which adaptive AI and multi-model intelligence are smarter, safer, and more innovative than ever before.

👉 Try Kumari AI Beta and experience the future of intelligent orchestration today.